Learning to Track at 100 FPS with Deep Regression Networks

Abstract

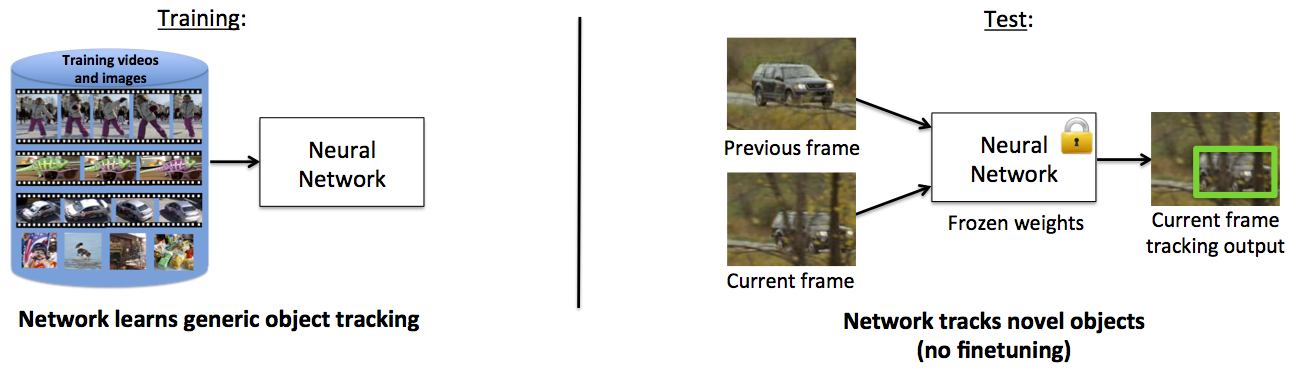

Machine learning techniques are often used in computer vision due to their ability to leverage large amounts of training data to improve performance. Unfortunately, most generic object trackers are still trained from scratch online and do not benefit from the large number of videos that are readily available for offline training. We propose a method for using neural networks to track generic objects in a way that allows them to improve performance by training on labeled videos. Previous attempts to use neural networks for tracking are very slow to run and not practical for real-time applications. In contrast, our tracker uses a simple feed-forward network with no online training required, allowing it to run at 100 fps at test time. Our tracker trains on both labeled video and a large collection of images, which helps prevent overfitting. The tracker learns generic object motion and can be used to track novel objects that do not appear in the training set. We test our network on a standard tracking benchmark to demonstrate our tracker's state-of-the-art performance. Our network learns to track generic objects in real time as they move throughout the world.
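To make "feed-forward network with no online training" concrete, the following is a minimal sketch of what such a test-time loop could look like. It is not the released C++ implementation. The names `search_region`, `to_image_coords`, `track`, and the `regress` callable are hypothetical, and the context factor of 2 and the convention that the network outputs a box as fractions of the search region are assumptions made for illustration, not details stated in the abstract.

```python
from typing import Any, Callable, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in image pixels


def search_region(box: Box, context: float = 2.0) -> Box:
    """Region centered on `box`, enlarged by an assumed `context` factor."""
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    half_w = context * (x2 - x1) / 2.0
    half_h = context * (y2 - y1) / 2.0
    return (cx - half_w, cy - half_h, cx + half_w, cy + half_h)


def to_image_coords(region: Box, rel: Box) -> Box:
    """Map a box given as fractions of `region` back to image pixels."""
    rx1, ry1, rx2, ry2 = region
    w, h = rx2 - rx1, ry2 - ry1
    return (rx1 + rel[0] * w, ry1 + rel[1] * h,
            rx1 + rel[2] * w, ry1 + rel[3] * h)


def track(frames: Sequence[Any], init_box: Box,
          regress: Callable[[Any, Any, Box], Box]) -> List[Box]:
    """Track one object through `frames` using one forward pass per frame.

    `regress(prev_frame, curr_frame, region)` stands in for the trained
    network (hypothetical interface). Its weights are never updated here,
    which is what "no online training" means in this sketch.
    """
    boxes = [init_box]
    for prev, curr in zip(frames, frames[1:]):
        region = search_region(boxes[-1])
        rel = regress(prev, curr, region)
        boxes.append(to_image_coords(region, rel))
    return boxes
```

Because each frame costs only a single call to `regress`, the per-frame running time in this sketch is dominated by one network evaluation, which is the property the abstract credits for real-time speed.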

Publications

Learning to Track at 100 FPS with Deep Regression Networks

David Held, Sebastian Thrun, Silvio Savarese

European Conference on Computer Vision (ECCV), 2016 (In press)

[Full Paper]

[Supplement]

Code

The C++ code for the neural-network tracker is available on GitHub here.

If you have any further questions about the tracker, please email me at davheld -at- cs -dot- stanford -dot- edu.

Videos

The performance of GOTURN (including failure cases) can be seen in this video:

Bibtex

@inproceedings{held2016learning,
  title = {Learning to Track at 100 FPS with Deep Regression Networks},
  author = {Held, David and Thrun, Sebastian and Savarese, Silvio},
  booktitle = {European Conference on Computer Vision (ECCV)},
  year = {2016}
}