Combining 3D Shape, Color, and Motion for Robust Velocity Estimation

Abstract

Although object tracking has been studied for decades, real-time tracking algorithms often suffer from low accuracy and poor robustness when confronted with difficult, real-world data. We present a tracker that combines 3D shape, color (when available), and motion cues to accurately estimate the velocity of moving objects in real-time. Our tracker allocates computational effort based on the shape of the posterior distribution. Starting with a coarse approximation to the posterior, the tracker successively refines this distribution, increasing in tracking accuracy over time. The tracker can thus be run for any amount of time, after which the current approximation to the posterior is returned. Even at a minimum runtime of 0.37 milliseconds, our method outperforms all of the baseline methods of similar speed by at least 25%. If our tracker is allowed to run for longer, the accuracy continues to improve, and it continues to outperform all baseline methods. Our tracker is thus anytime, allowing the speed or accuracy to be optimized based on the needs of the application.

Publications

Robust Real-Time Tracking Combining 3D Shape, Color, and Motion.

David Held, Jesse Levinson, Sebastian Thrun, Silvio Savarese.

International Journal of Robotics Research (IJRR), 2015

[Full paper]

Combining 3D Shape, Color, and Motion for Robust Anytime Tracking.

David Held, Jesse Levinson, Sebastian Thrun, Silvio Savarese.

Robotics: Science and Systems (RSS), 2014

[Full paper]

[Presentation]

[Poster - pptx]

[Poster - pdf]

Precision Tracking with Sparse 3D and Dense Color 2D Data. Best Vision Paper Finalist.

David Held, Jesse Levinson, Sebastian Thrun

International Conference on Robotics and Automation (ICRA), 2013

[Full paper]

Code

The C++ code for the velocity estimation method is available on GitHub here. To run a quick test of the code, you will also need to download this file of test data: Test data

If you have any further questions about the method, please email me at davheld -at- cs -dot- stanford -dot- edu.
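The abstract describes an anytime tracker that starts from a coarse approximation of the posterior over velocity and keeps refining it for as long as it is allowed to run. The C++ sketch below illustrates that idea with a simple coarse-to-fine grid over 2D velocity: cells whose posterior weight is at least a fraction of the current maximum are split into four children, and refinement stops when a time budget or a level cap is reached. It is not the released code; the names `Cell`, `anytimeSearch`, and `meanVelocity`, the restriction to 2D velocity, and the split rule are assumptions made for illustration, and the posterior itself is supplied by the caller.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <functional>
#include <utility>
#include <vector>

// One cell of a 2D velocity grid (hypothetical representation, not the released code).
struct Cell {
  double vx, vy;  // cell center, m/s
  double size;    // side length, m/s
  double weight;  // unnormalized posterior evaluated at the center
};

// Caller-supplied unnormalized posterior p(vx, vy | data) (assumption).
using Posterior = std::function<double(double, double)>;

// Weighted mean velocity of the current approximation; each cell's mass is
// approximated as (density at its center) * (cell area).
std::pair<double, double> meanVelocity(const std::vector<Cell>& cells) {
  double sw = 0, sx = 0, sy = 0;
  for (const Cell& c : cells) {
    const double w = c.weight * c.size * c.size;
    sw += w;
    sx += w * c.vx;
    sy += w * c.vy;
  }
  assert(sw > 0);
  return {sx / sw, sy / sw};
}

// Anytime coarse-to-fine search over [-halfRange, halfRange]^2.
// Stops when budgetMs has elapsed (checked between levels) or after maxLevels splits.
std::vector<Cell> anytimeSearch(const Posterior& p, double halfRange, int cellsPerSide,
                                double budgetMs, int maxLevels, double keepFraction) {
  assert(halfRange > 0 && cellsPerSide > 0 && keepFraction <= 1.0);
  using Clock = std::chrono::steady_clock;
  const auto start = Clock::now();
  auto elapsedMs = [&] {
    return std::chrono::duration<double, std::milli>(Clock::now() - start).count();
  };

  // Coarse initial approximation of the posterior.
  std::vector<Cell> cells;
  const double size = 2 * halfRange / cellsPerSide;
  for (int i = 0; i < cellsPerSide; ++i)
    for (int j = 0; j < cellsPerSide; ++j) {
      const double vx = -halfRange + (i + 0.5) * size;
      const double vy = -halfRange + (j + 0.5) * size;
      cells.push_back({vx, vy, size, p(vx, vy)});
    }

  // Each pass refines only the cells that carry significant posterior weight.
  for (int level = 0; level < maxLevels && elapsedMs() < budgetMs; ++level) {
    double maxW = 0;
    for (const Cell& c : cells) maxW = std::max(maxW, c.weight);
    std::vector<Cell> next;
    for (const Cell& c : cells) {
      if (c.weight < keepFraction * maxW) {
        next.push_back(c);  // low-probability cell stays coarse
        continue;
      }
      const double h = c.size / 2;  // split into four children of half the side length
      for (int dx = 0; dx < 2; ++dx)
        for (int dy = 0; dy < 2; ++dy) {
          const double vx = c.vx + (dx - 0.5) * h;
          const double vy = c.vy + (dy - 0.5) * h;
          next.push_back({vx, vy, h, p(vx, vy)});
        }
    }
    cells.swap(next);
  }
  return cells;  // whatever approximation is available when time runs out
}
```

For example, `anytimeSearch(post, 5.0, 10, 0.5, 8, 0.01)` starts from a 10 × 10 grid over ±5 m/s and stops refining once roughly 0.5 ms have elapsed. Because the budget is checked only between levels, the last level can overrun it slightly; a production version would check the clock inside the split loop as well.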

Videos

Using the velocity estimates produced by our tracker, we can accumulate points for a bicyclist as it bikes by:

This is the bicyclist that we are tracking in the video above. Notice that the bicyclist also rotates while riding around the curve.

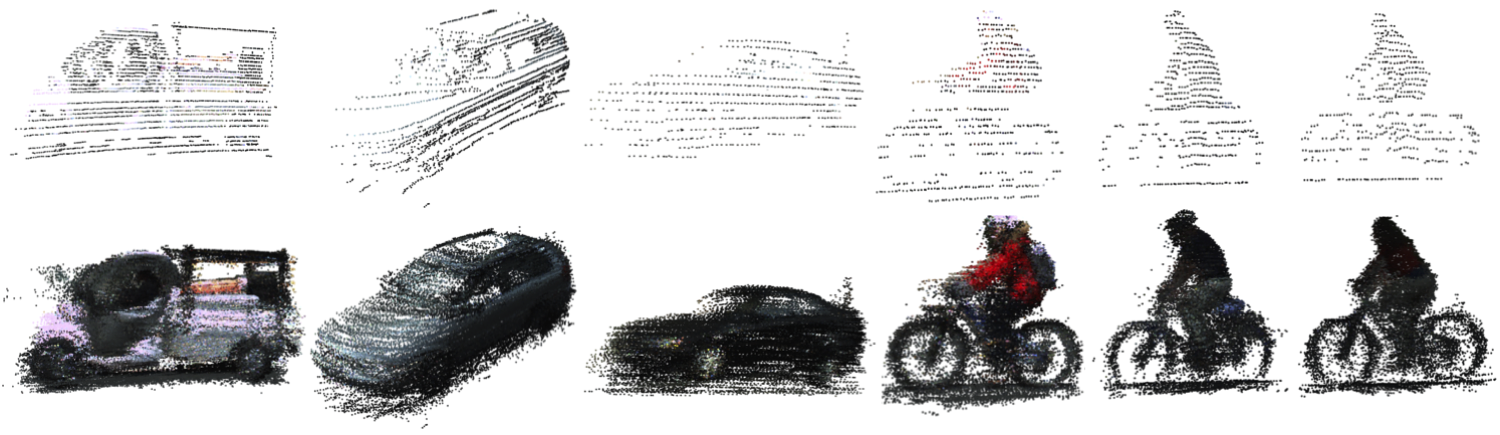

Here are some other models that are built using the frame-to-frame velocity estimates produced by our tracker. The top row shows the single frame with the most points observed by our sensor. The bottom row shows the accumulated model produced by our tracker.
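Building a model from frame-to-frame velocity estimates amounts to shifting each earlier scan by the displacement the object has covered since that scan was taken, then concatenating the scans. A minimal C++ sketch, assuming translation-only velocity estimates between consecutive frames (it ignores rotation such as the bicyclist's turn) and using the hypothetical names `Point3`, `Velocity3`, and `accumulateModel`:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Point3 { double x, y, z; };
// Hypothetical translation-only velocity estimate (m/s); rotation is ignored.
struct Velocity3 { double vx, vy, vz; };

// Shifts every frame into the coordinate frame of the last observation.
// velocities[k] is the estimate between frames k and k+1.
std::vector<Point3> accumulateModel(const std::vector<std::vector<Point3>>& frames,
                                    const std::vector<double>& timestamps,
                                    const std::vector<Velocity3>& velocities) {
  assert(!frames.empty());
  assert(timestamps.size() == frames.size());
  assert(velocities.size() + 1 == frames.size());
  std::vector<Point3> model(frames.back());  // latest frame needs no shift
  double ox = 0, oy = 0, oz = 0;             // displacement from frame k to the last frame
  // Walk backwards so the displacement accumulates one frame interval at a time.
  for (std::size_t k = frames.size() - 1; k-- > 0;) {
    const double dt = timestamps[k + 1] - timestamps[k];
    ox += velocities[k].vx * dt;
    oy += velocities[k].vy * dt;
    oz += velocities[k].vz * dt;
    for (const Point3& p : frames[k]) model.push_back({p.x + ox, p.y + oy, p.z + oz});
  }
  return model;
}
```

If the velocity estimates are accurate, points observed on the same surface in different frames land on top of each other, which is what makes the accumulated model look sharper than any single frame.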

We can optionally include color in our tracker as well. To do this, we first learn a probability distribution over how much the color of a given point changes over time (due to different viewing angles, changing shadows, reflectance, etc.). Using the 3D information obtained from our laser and the ego-motion estimate from our INS (i.e., the motion of our camera), we can track a single point as we move past it. We can then observe how the color of this point changes over time and learn the probability of observing any given color change over a single frame. This video shows one example point that we are tracking to learn how its color changes as we move past it.
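One simple way to represent such a learned color-change distribution is a normalized histogram over the color distance between two observations of the same tracked point in consecutive frames. The sketch below assumes RGB colors, Euclidean RGB distance, a fixed bin width, and add-one smoothing so that unseen changes keep a small nonzero probability; `Rgb` and `ColorChangeModel` are hypothetical names, and the paper's actual parameterization may differ.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Rgb { double r, g, b; };  // channel values in [0, 255] (assumption)

// Histogram estimate of p(color change over one frame) (illustrative sketch).
class ColorChangeModel {
 public:
  ColorChangeModel(double binWidth, std::size_t numBins)
      : binWidth_(binWidth), counts_(numBins, 1.0), total_(static_cast<double>(numBins)) {
    assert(binWidth > 0 && numBins > 0);  // add-one smoothing: every bin starts at 1
  }

  // Records the colors of the same 3D point observed in two consecutive frames.
  void addObservation(const Rgb& before, const Rgb& after) {
    counts_[binOf(before, after)] += 1.0;
    total_ += 1.0;
  }

  // Estimated probability of observing this much color change in one frame.
  double probability(const Rgb& before, const Rgb& after) const {
    return counts_[binOf(before, after)] / total_;
  }

 private:
  std::size_t binOf(const Rgb& a, const Rgb& b) const {
    const double d = std::sqrt((a.r - b.r) * (a.r - b.r) + (a.g - b.g) * (a.g - b.g) +
                               (a.b - b.b) * (a.b - b.b));
    const auto bin = static_cast<std::size_t>(d / binWidth_);
    return bin < counts_.size() ? bin : counts_.size() - 1;  // clamp large changes into the last bin
  }

  double binWidth_;
  std::vector<double> counts_;
  double total_;
};
```

Once trained on many tracked points, a model like this can score how plausible the color differences are under a candidate velocity, which is how color can contribute alongside the 3D shape and motion cues.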

Bibtex

@INPROCEEDINGS{Held-RSS-14,
  AUTHOR = {David Held AND Jesse Levinson AND Sebastian Thrun AND Silvio Savarese},
  TITLE = {Combining 3D Shape, Color, and Motion for Robust Anytime Tracking},
  BOOKTITLE = {Proceedings of Robotics: Science and Systems},
  YEAR = {2014},
  ADDRESS = {Berkeley, USA},
  MONTH = {July}
}